“The tragedy of life is in what dies inside a person while she or he lives – the death of genuine feeling, the death of inspired response, the awareness that makes it possible to feel the pain or glory of other people in yourself.” —Norman Cousins

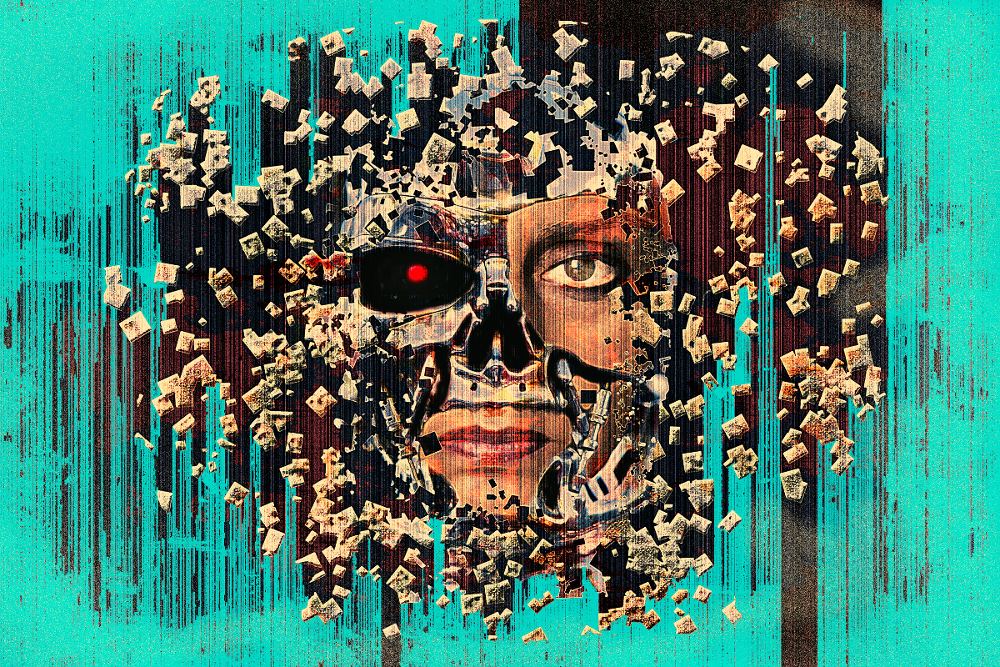

We just bought an Amazon Echo, so we would have the facts of the world at our simple command. “Alexa, what’s the weather?” “Alexa, what’s the news?” “Alexa, play Leonard Cohen.” It’s truly amazing how far Artificial Intelligence (AI) has come by combining multiple algorithms to answer a whole range of questions. Artificial emotions? Not so much.

I was so impressed by Alexa’s ability to search the world databases to find answers to random inquiries that I decided to test its ability (yes, Alexa is an “it,” not a “her”) to respond accurately to emotional stimuli.

“Alexa, I feel happy today because it’s beautiful outside.” Alexa: “Hmm, I’m not sure about that.”

“Alexa, I’m really angry because people are treating me unfairly.” Alexa: “Hmm, I don’t know about that.”

“Alexa, I’m really sad because a friend is in very bad health.” Alexa: “Hmm, I’m not sure about that.”

“Alexa, I’m really scared because life seems so uncertain and threatening these days.” Alexa: “Hmm, I don’t know about that.”

“Alexa, I’m confused because different news sources report entirely different views on the same subjects.” Alexa: “Hmm. I’m not sure about that.”

As you read in the “interactions” above, I shared stories related to the five feeling categories that cover almost all emotional states: Up, Down, Anger, Fear, and Confusion.

Alexa was not able to provide an interchangeable response to any of the stories. More importantly, I’m not sure it would be a good thing if Alexa were programmed to engage in artificial empathy with the person sharing his or her joy or angst.

I have no skills in computer programming or in AI, but it seems to me it would be fairly easy to program these devices to respond with artificial emotions. In the chart below, you can see that feelings can be easily summarized by categories and intensity. I have provided examples of feeling words in each of the 15 boxes, but I would encourage you to expand your repertoire of responses by adding words that you might use to make an empathic response to a stranger, loved one, or friend.

| Intensity | Up | Down | Anger | Fear | Confusion |
|---|---|---|---|---|---|
| High Intensity | Ecstatic, Thrilled, Exhilarated | Suicidal, Depressed, Devastated | Enraged, Murderous, Furious | Terrified, Panicky, Petrified | Disoriented, Torn apart, Conflicted |
| Medium Intensity | Happy, Joyful, Gleeful | Lonely, Discouraged, Disappointed | Mad, Angry, Vengeful | Afraid, Anxious, Scared | Lost, Confused, Split |
| Low Intensity | Content, Glad, Satisfied | Bad, Sad, Bummed | Irritated, Annoyed, Frustrated | Nervous, Apprehensive, Guarded | Unsure, Uncertain, Mixed up |

Given that feelings can be identified by category and intensity, it doesn’t seem like a big leap to equip AI tools to listen to what a person says, place the emotion in one of the 15 boxes, and then simply choose an interchangeable response to the statement. Voila! An artificial emotional response.
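To show how small that "leap" might be, here is a deliberately naive Python sketch. It assumes the feeling word appears before the word "because" and the content after it. The names `FEELINGS`, `PRONOUNS`, `classify`, `reflect`, and `artificial_response` are hypothetical and have nothing to do with how Alexa actually works. The sketch looks up the chart, picks a neighboring word from the same box, and echoes the content back.

```python
import re
from typing import Optional

# The 15-box chart as a lookup table: (category, intensity) -> feeling words.
FEELINGS = {
    ("Up", "High"): ["ecstatic", "thrilled", "exhilarated"],
    ("Up", "Medium"): ["happy", "joyful", "gleeful"],
    ("Up", "Low"): ["content", "glad", "satisfied"],
    ("Down", "High"): ["suicidal", "depressed", "devastated"],
    ("Down", "Medium"): ["lonely", "discouraged", "disappointed"],
    ("Down", "Low"): ["bad", "sad", "bummed"],
    ("Anger", "High"): ["enraged", "murderous", "furious"],
    ("Anger", "Medium"): ["mad", "angry", "vengeful"],
    ("Anger", "Low"): ["irritated", "annoyed", "frustrated"],
    ("Fear", "High"): ["terrified", "panicky", "petrified"],
    ("Fear", "Medium"): ["afraid", "anxious", "scared"],
    ("Fear", "Low"): ["nervous", "apprehensive", "guarded"],
    ("Confusion", "High"): ["disoriented", "torn apart", "conflicted"],
    ("Confusion", "Medium"): ["lost", "confused", "split"],
    ("Confusion", "Low"): ["unsure", "uncertain", "mixed up"],
}

# Hypothetical first-person -> second-person swaps for echoing the content back.
PRONOUNS = {"i": "you", "me": "you", "my": "your", "mine": "yours",
            "i'm": "you're", "myself": "yourself"}


def classify(feeling_text: str) -> Optional[tuple[str, str, str]]:
    """Return (category, intensity, matched word) for the first chart word found, else None."""
    lowered = feeling_text.lower()
    for (category, intensity), words in FEELINGS.items():
        for word in words:
            if re.search(r"\b" + re.escape(word) + r"\b", lowered):
                return category, intensity, word
    return None


def reflect(content: str) -> str:
    """Swap first-person pronouns for second-person ones, word by word."""
    return " ".join(PRONOUNS.get(w.lower(), w) for w in content.split())


def artificial_response(statement: str) -> str:
    """Place the feeling in a box, then fill in 'You feel ___ because ___.'"""
    # Everything before "because" is treated as the feeling, everything after as the content.
    feeling_text, _, content = statement.partition(" because ")
    match = classify(feeling_text)
    if match is None:
        return "Hmm, I'm not sure about that."
    category, intensity, word = match
    # Pick a different word from the same box so the reply doesn't simply parrot the speaker.
    synonym = next(w for w in FEELINGS[(category, intensity)] if w != word)
    content = reflect(content.strip().rstrip(".!"))
    return f"You feel {synonym} because {content}." if content else f"You feel {synonym}."


print(artificial_response("Alexa, I feel happy today because it's beautiful outside."))
# You feel joyful because it's beautiful outside.
print(artificial_response("Alexa, I'm really angry because people are treating me unfairly."))
# You feel mad because people are treating you unfairly.
```

Every step here is string matching. Nothing in it responds to meaning, so the reply can be technically interchangeable without being genuinely generated.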

Thus, in response to “Alexa, I feel happy today because it’s beautiful outside,” Alexa might search the database and say: “You feel joyful because the weather is nice today.” Technically, that would count as an interchangeable response, because it accurately demonstrates understanding of both the feeling and the content. And that’s the problem, not only with AI in computers but with AE (artificial emotions) in humans generally.

We may give technically correct responses, but they are not necessarily genuinely generated.

Think about the “responses” you get from well-trained customer service representatives when you call in with a complaint. I don’t know about you, but the responses feel awfully mechanical and automated to me. In fairness, if I were doing that job, I’m sure my responses wouldn’t exactly be heartfelt either.

In more than 20 years and 10,000 hours of experience training people in interpersonal skills, I fought for deeper empathy and connection and fought against robotic responses that simply regurgitated words without genuinely caring about the person to whom the response was directed.

My proposal above for using algorithms to respond to human emotions takes emotional artificiality to an even lower level. At a minimum, people who make the effort to demonstrate understanding to another human have to do the work of formulating a response, whatever their level of authenticity or compassion. AI is simply searching databases for content. There is no attempt to respond to meaning.

After all, it’s the internet of things (IoT), not the internet of people, that’s all the rage these days.

Genuine, heartfelt, human connection is one of the most critical needs in today’s automated society.

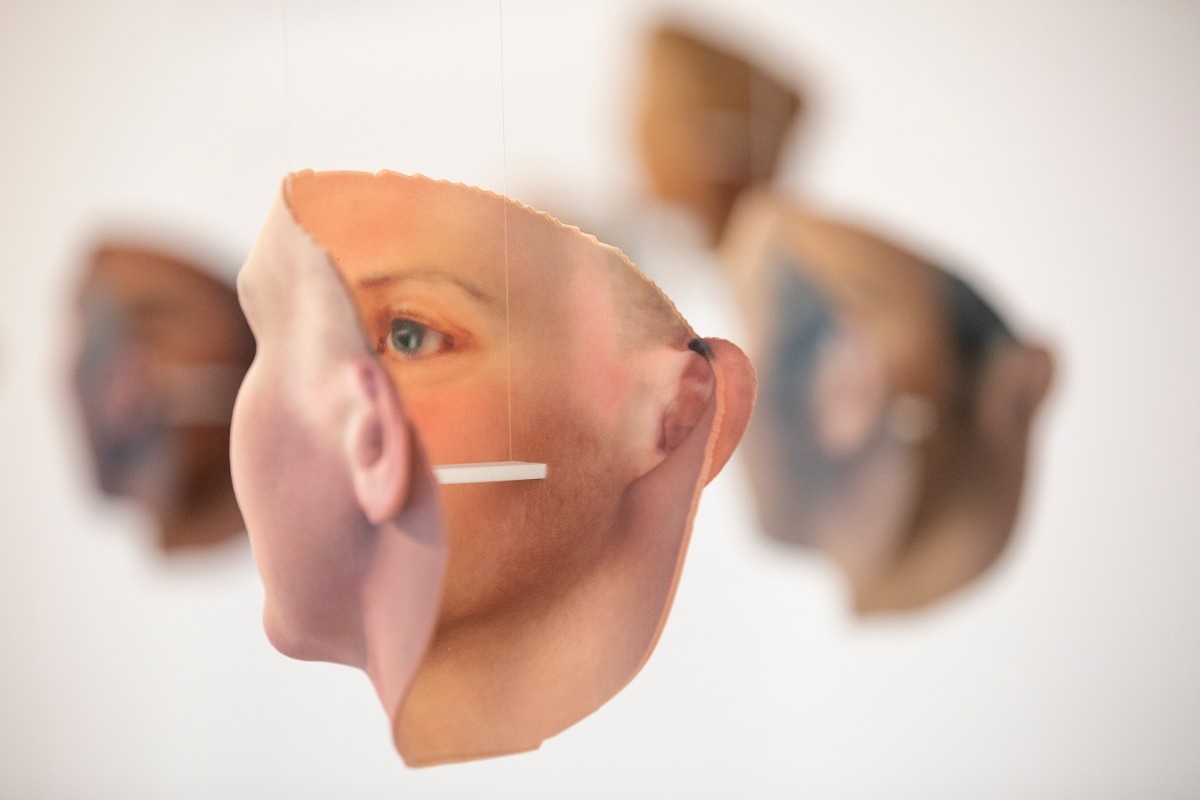

We need face-to-face, eye-to-eye, heart-to-heart intimacy to deal with the dehumanization that surrounds us.

Artificiality dooms us to become more machine-like in our human engagements. Genuine empathy gives us a chance to deal with the suffering and struggles of our fellow beings in humane and meaningful ways.

I recently read the book Refugee by Alan Gratz, an engaging and important book written primarily for middle-school children to help them understand the plight of the 70 million refugees and displaced persons around the world. Gratz tells the stories of three kids: the first from Nazi Germany in the 1930s, the second from Cuba in the 1990s, and the third from present-day Syria. All three children embark on harrowing journeys in search of safety, at great risk to their lives. I can’t imagine anyone reading this book and not being deeply affected by these gripping stories. If books like this don’t compel us to connect with loving kindness, I’m not sure what will. Middle-school kids are quickly caught up in the stories as they unfold. Hopefully, they will be inspired to take action on the refugee crisis around the world.

For a domestic perspective, I just read Dopesick by Beth Macy, which describes in detail how dealers, doctors, and drug companies have addicted America.

This book shares the heart-wrenching stories of several families who grappled with the rehab, relapse, and ruination of their family members.

Macy reports brilliantly and thoroughly on the surge in opioid addiction over the past two decades.

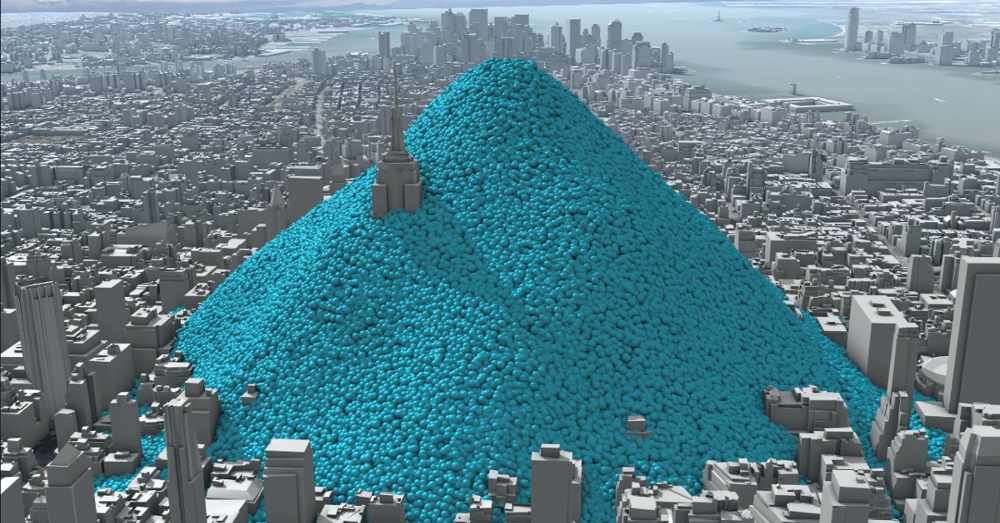

Drug overdoses have claimed the lives of 300,000 Americans over the past fifteen years, and experts now predict that 300,000 more will die in the next five years. Overdose is now the leading cause of death for Americans under the age of 50.

I reference Dopesick here because it seems to me that the opioid epidemic is caused as much by emotional pain as it is by physical pain.

People are increasingly depressed, anxious, isolated, and alienated. We not only need laws to combat this scourge; we also need stronger communities, compassionate hearts, and genuine emotional connections. We won’t find these cures on our smartphones, tablets, computers, or internet searches. There are no AI answers. We will only find the cure if we look deep inside and craft authentic responses.

We have made enormous progress in neural networks, gene mapping, medical technology and, yes, artificial intelligence. I wish we could make equivalent progress in finding the pathways to our hearts and the connections to our fellow humans. Developing genuine empathy and authentic emotions would be a good place to start. May it be so.
